Making Machines Think Like Humans

November 19, 2018

- Author: Murray Slovick, Contributing Author

November/December 2018


Right at the start, I want to invoke a temporary restraining order: Nowhere in this column will I use the phrase "machines will never think like humans." Once upon a time no one thought humans would ever fly in a machine, so I will be careful about declaring what machines can't do.

With that disclaimer in place, I'll say this: even with the advent of artificial intelligence (AI), computer programs that truly think like humans remain far beyond where we are now. Today's AIs are powerful, skilled machines (or chips) that can "learn" in new ways. The most obvious evidence of AI's improved powers is in gadgets such as smart speakers with virtual assistants, iPhones you can unlock with face recognition, online apps that anticipate your next purchase and the self-driving vehicles now on the way.

We've also watched IBM's Watson defeat human champions on the game show "Jeopardy!" and Google's AlphaGo beat the world's best players of the complex Chinese board game Go.

AI Breakthroughs

Machine learning involves training computers to perform tasks based on examples, rather than solely on explicit programming. Deep learning has made this approach more powerful by using artificial neural networks that loosely mimic how our brain cells work, forming adjustable connections between different parts of the network. By strengthening or weakening those connections as it processes examples, the machine learns from its experience and builds up an ability to interpret similar data in the future.
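To make the idea of "adjustable connections" concrete, here is a toy sketch in Python of a single artificial neuron learning the logical AND function from four labeled examples rather than from a hand-written rule. It uses the classic perceptron update, an assumption chosen for simplicity; real deep-learning systems stack many layers of such units and train them with gradient-based methods. The function names and parameter values are illustrative.

```python
# Toy sketch: one artificial "neuron" learns logical AND from examples.
# Illustrative only; real deep-learning systems use many layers of units
# trained with gradient descent, not this simple perceptron rule.

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs is above zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_neuron(examples, epochs=20, learning_rate=0.5):
    """Learn connection weights and a bias from (inputs, label) pairs."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Examples instead of explicit rules: the AND function.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(and_examples)
for inputs, label in and_examples:
    print(inputs, "expected", label, "learned", predict(weights, bias, inputs))
```

No rule for AND appears anywhere in the code; the neuron's answers come entirely from the connection strengths it settled on while working through the examples.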

But even with deep learning, an AI computer must still gather facts about a situation through sensors, compare this information with its stored data, run through the possible actions, choose the one most likely to succeed and finally "remember" to repeat that action the next time it encounters the same situation.
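That sense, compare, choose and remember cycle can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular AI product works: the `SimpleAgent` class and the hand-written `estimate_success` scores (standing in for a trained model) are assumptions made for the example.

```python
# Hypothetical sketch of the cycle: sense a situation, compare it with
# stored experience, weigh possible actions, pick the most promising one,
# and remember it for next time. All names here are illustrative.

class SimpleAgent:
    def __init__(self, actions):
        self.actions = actions
        self.memory = {}  # situation -> action chosen before

    def act(self, situation, estimate_success):
        # Compare with stored data: reuse a remembered action if there is one.
        if situation in self.memory:
            return self.memory[situation]
        # Otherwise run through the possible actions and choose the one
        # estimated to be most likely to succeed.
        best = max(self.actions, key=lambda a: estimate_success(situation, a))
        # "Remember" the choice so the same situation is handled directly next time.
        self.memory[situation] = best
        return best

def estimate_success(situation, action):
    # Hypothetical hand-written scores standing in for a trained model.
    scores = {
        ("obstacle ahead", "brake"): 0.9,
        ("obstacle ahead", "accelerate"): 0.1,
        ("road clear", "accelerate"): 0.8,
        ("road clear", "brake"): 0.3,
    }
    return scores.get((situation, action), 0.0)

agent = SimpleAgent(["brake", "accelerate"])
print(agent.act("obstacle ahead", estimate_success))  # brake
print(agent.act("road clear", estimate_success))      # accelerate
```

Because the agent stores its choice, the second time it "senses" an obstacle ahead it skips the evaluation step entirely, which is the "remember" stage in miniature.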

The difficulty is that most of the impressive progress we've made to date comes from supervised learning systems, which depend on large quantities of human-provided, labeled data. The main obstacle to duplicating human learning is getting machines to learn in an unsupervised manner; they need to become more adaptable. Eventually we want machines that can learn a new skill from just one or two examples, duplicating the human ability to work successfully in entirely new situations.

Supervised learning is not how humans learn. Children can learn just by observing. Teachers don't have to tell a child "this is water, and water is wet"; children experience it for themselves and know it with certainty after a single encounter.

In 1950, Alan Turing, a computing pioneer, conceived a test of whether a computer can exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. The Turing test works like this: A judge holds a text conversation on a screen with two hidden partners, one a human and the other a machine, and must guess which typed responses come from the human and which from the computer. The more often the AI is mistaken for a human, the better it is. When the judge cannot reliably tell the machine from the human, the machine has passed the test.

Part of the difficulty of developing truly intelligent machines is that we don't understand how natural intelligence works. Our brains contain billions of neurons, and we learn by forming and strengthening connections between them. But we don't know exactly how these connections add up to higher reasoning, let alone inference, instinct and self-awareness.

Machine reasoning will improve as we develop systems that continuously sense, interact with and learn from the world. But building a machine that can replicate the key facets of human thinking will take time.

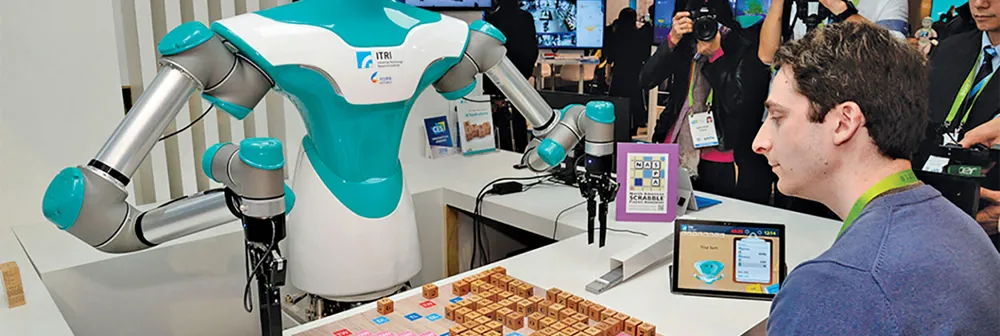
