Display to Gameplay: Using AI to Power Next-Generation Gaming Technologies

July 23, 2025

- Author: CTA Staff

Fifty years have passed since the home console version of Atari’s “Pong” debuted at CES 1975. Since then, video games have emerged as one of the defining forms of consumer technology, bridging television entertainment with interactivity while transporting players to new worlds and offering unique forms of storytelling.

According to CTA’s 2024 U.S. Future of Gaming research report, there are an estimated 156.1 million gamers 13 years or older, representing 55% of the U.S. population. Among these gamers, interest in high-performance gaming displays has strong potential for growth. While only 32% of gamers say their current display supports HDMI 2.1, 39% say their next TV or monitor will include this feature, signaling a shift toward displays designed with high-performance gaming in mind. HDMI 2.1 enables key features like 4K resolution at 120Hz or 8K at 60Hz, which support smoother and more detailed gameplay. Similarly, 34% of gamers intend to purchase displays that support refresh rates of 144Hz and above, up from the 30% of currently owned displays that do.
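To give a sense of scale for those two display modes, the short sketch below estimates how many pixels each one delivers per second. It is a back-of-the-envelope illustration only: the 30 bits per pixel figure (10 bits for each of three color channels) is an assumption chosen for the example, and the calculation ignores blanking intervals, chroma subsampling and compression, so the results are not actual HDMI link rates.

```python
# Rough, uncompressed data-rate estimate for the two display modes named above.
# Assumptions (not from the CTA report): 30 bits per pixel, active pixels only,
# no blanking, no chroma subsampling, no compression.

def pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Active pixels sent to the display each second."""
    return width * height * refresh_hz


def raw_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 30) -> float:
    """Uncompressed data rate in gigabits per second for the active picture."""
    return pixel_rate(width, height, refresh_hz) * bits_per_pixel / 1e9


MODES = {
    "4K @ 120Hz": (3840, 2160, 120),
    "8K @ 60Hz": (7680, 4320, 60),
}

for name, (w, h, hz) in MODES.items():
    mpix = pixel_rate(w, h, hz) / 1e6
    print(f"{name}: {mpix:.0f} Mpixel/s, about {raw_gbps(w, h, hz):.1f} Gbit/s raw")
```

Under these assumptions, 4K at 120Hz works out to roughly 29.9 Gbit/s and 8K at 60Hz to roughly 59.7 Gbit/s, so the 8K mode moves twice as many pixels per second despite its lower refresh rate.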

As gamer preferences continue to evolve, so does the technology that powers play – especially the video technology at the heart of the experience. The newest televisions and monitors are being enhanced by AI, rapidly transforming how developers, manufacturers and players approach the future of gaming.

These advances touch picture quality, frame smoothness and performance, and even adaptivity based on user behavior. Together, they mark a new era of smarter, more responsive display technology.

Picture Quality

One of the most visible ways AI is enhancing the gaming experience is through the rise of AI-based super resolution technologies. These solutions, often powered by a system’s graphics processing unit (GPU), render games at lower native resolutions while using AI models to deliver upscaled, high-fidelity visuals on screen.

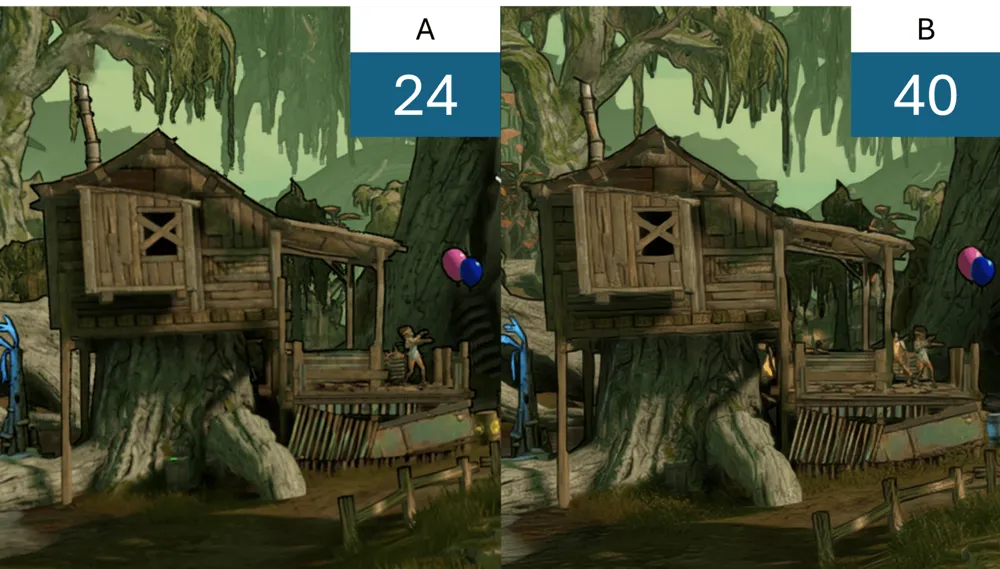

Microsoft’s Automatic Super Resolution (Auto SR) is the first operating system-integrated AI upscaling feature designed specifically for gaming. Built into Windows 11 through DirectX, Auto SR uses a trained neural network to upscale supported games in real time, delivering enhanced visuals and helping maintain smoother performance. On its DirectX Developer Blog, Microsoft highlights a side-by-side comparison from Borderlands 3: one version rendered natively at 1440p and running at 24 frames per second, and another rendered at 720p and upscaled via Auto SR, which nearly doubles the frame rate.
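The arithmetic behind that comparison is simple to sketch. The example below assumes the common 16:9 definitions of 720p (1280x720) and 1440p (2560x1440), which the source does not spell out, and compares how many pixels the game has to render in each case.

```python
# Pixel-count comparison for render resolution vs. output resolution.
# Assumption: standard 16:9 frame sizes for "720p" and "1440p".

RESOLUTIONS = {
    "720p": (1280, 720),
    "1440p": (2560, 1440),
}


def pixels(name: str) -> int:
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def rendered_fraction(render: str, output: str) -> float:
    """Share of the output frame's pixels that the game actually renders."""
    return pixels(render) / pixels(output)


print(pixels("1440p"), pixels("720p"))       # 3686400 921600
print(rendered_fraction("720p", "1440p"))    # 0.25
```

Rendering at 720p means producing a quarter of the pixels of a native 1440p frame. The frame rate in Microsoft's comparison roughly doubles rather than quadruples, presumably because the upscaling pass and other per-frame work do not shrink with the render resolution.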

Meanwhile, Sony has introduced its own AI upscaling solution, PlayStation Spectral Super Resolution (PSSR), which debuted with the PS5 Pro in 2024. PSSR is designed to deliver enhanced resolutions for select titles by using AI to upscale frames with greater detail and efficiency, boosting visual quality without compromising performance.

Polyphony Digital has applied PSSR to improve the visual fidelity of Gran Turismo 7. According to Shuichi Takano, main programmer at Polyphony Digital, the PS5 Pro’s increased resolution and performance have enabled the team to model cars and tracks with greater precision than before. The game features experimental support for 8K resolution at 60 frames per second, offering players a more immersive racing experience supported by real-time AI upscaling.

These enhancements show how AI is being used to support high-resolution output, enabling developers to deliver rich detail without sacrificing performance. Together with similar efforts across the industry, they signal a future where upscaling becomes intelligent and adaptive, reducing barriers to premium visual experiences and allowing more consumers to enjoy high-end gameplay on a wider range of systems and displays.

Enhanced Gameplay

High perceived framerates and smooth motion are critical for immersive play. Whether navigating fast-paced action or exploring expansive open worlds, a consistent and fluid visual experience can have a direct impact on how players engage with a game.

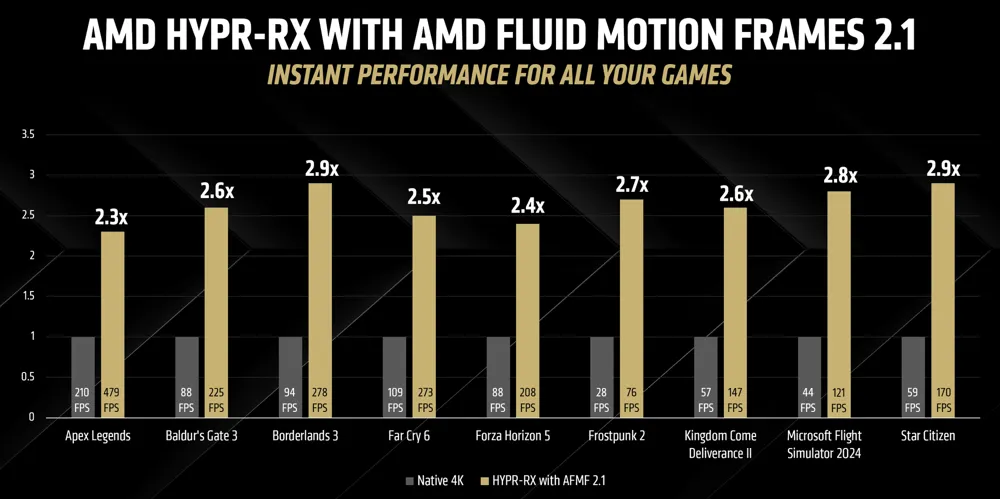

AMD’s HYPR-RX feature suite includes AMD Fluid Motion Frames (AFMF), a frame generation technology that improves perceived framerate without requiring the game to render more frames. Integrated at the driver level and supported on select AMD Radeon graphics cards, this technology works by generating additional frames between those rendered by the game. The result is significantly smoother motion, particularly noticeable on displays with higher refresh rates. AFMF 2.1 also improves ghosting reduction and better preserves text overlays and fine detail.
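The general idea of inserting generated frames between rendered ones can be illustrated with a toy example. The sketch below is not AMD's method: it swaps in a plain linear blend of neighboring frames as a stand-in for the generation step, and the `Frame` type, `blend` and `interleave_generated` names are hypothetical. It only shows how the displayed frame count grows while the number of game-rendered frames stays the same.

```python
from typing import List

# Toy stand-in: a frame is a flat list of pixel intensities.
Frame = List[float]


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linear blend of two frames. A placeholder for the real generation
    step, which this sketch does not attempt to reproduce."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def interleave_generated(rendered: List[Frame]) -> List[Frame]:
    """Insert one generated frame between each pair of rendered frames."""
    if not rendered:
        return []
    out = [rendered[0]]
    for prev, nxt in zip(rendered, rendered[1:]):
        out.append(blend(prev, nxt))  # generated in-between frame
        out.append(nxt)               # original rendered frame
    return out


rendered = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
displayed = interleave_generated(rendered)
print(len(rendered), "rendered ->", len(displayed), "displayed")
```

With three rendered frames the display receives five, so over a long sequence the displayed frame rate approaches twice the rendered rate. A simple blend like this would smear moving objects, which is one reason production frame generation relies on more sophisticated motion analysis.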

To learn more about AMD Fluid Motion Frames and other AI features, visit AMD’s Community Blog.

For players, this translates to more fluid gameplay, even without the most powerful hardware. For developers, it offers a hardware-supported way to increase perceived framerates without lowering resolution or scaling back visual detail. As frame generation technologies become more widely adopted, they offer a new tool for optimizing performance and improving how games look across devices.

User Experience

Beyond resolution and framerates, the wider user experience plays a critical role in how immersive gaming and entertainment feel. With the integration of AI into consumer displays, manufacturers like Samsung and LG are enabling TVs to adapt automatically to the content being played. For players, this reduces the need for manual adjustments and allows for more seamless, uninterrupted gameplay.

Samsung’s AI Auto Game Mode is designed to optimize display settings when a console or gaming device is detected. Compatible Samsung TVs can adjust picture and audio parameters — such as brightness, contrast and input delay — based on the gameplay context. This allows players to switch between game genres without having to manually reconfigure their display, helping them maintain consistent performance and visual clarity across different titles.

LG’s Game Optimizer offers a centralized interface for adjusting key picture and latency settings during gameplay. It includes quick access to features like Variable Refresh Rate (VRR), Auto Low Latency Mode (ALLM) and High Dynamic Range (HDR) tone mapping. In addition, AI Game Sound adjusts audio output automatically when gaming is detected, enhancing clarity and spatial effects. These features are active during gameplay and designed to reduce friction, allowing players to fine-tune their experience without navigating deep menus or interrupting their game.
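The adaptive behavior described for these TVs can be pictured as a lookup from detected context to a settings preset. The sketch below is purely illustrative: the `PicturePreset` fields, the genre labels and every numeric value are hypothetical, and it does not represent how Samsung or LG implement their features.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PicturePreset:
    brightness: int    # 0-100, illustrative scale
    contrast: int      # 0-100, illustrative scale
    low_latency: bool  # skip extra picture processing to reduce input delay


# Hypothetical presets; real TVs do not publish values like these.
STANDARD = PicturePreset(brightness=50, contrast=50, low_latency=False)
DEFAULT_GAME = PicturePreset(brightness=55, contrast=55, low_latency=True)
GENRE_PRESETS = {
    "fps": PicturePreset(brightness=60, contrast=55, low_latency=True),
    "rpg": PicturePreset(brightness=50, contrast=60, low_latency=True),
}


def select_preset(source_is_game_device: bool, genre: Optional[str] = None) -> PicturePreset:
    """Pick a preset from the detected input source and, if known, the genre."""
    if not source_is_game_device:
        return STANDARD
    # Fall back to a generic game preset when the genre is unknown.
    return GENRE_PRESETS.get(genre, DEFAULT_GAME)


print(select_preset(True, "fps"))
print(select_preset(False))
```

The point of the design is that the player never touches a menu: the input source and content decide which preset applies, with a sensible fallback when the content cannot be classified.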

By embedding context-aware optimization into their TVs, manufacturers are enabling displays to respond more intelligently to gameplay scenarios. These tools reflect a broader trend toward adaptive display technologies, where AI contributes not only to image and sound quality, but to a more intuitive and efficient gaming experience overall.

Collaboration is Key

As gaming continues to shape the future of television and display innovation, AI is unlocking new levels of performance, adaptability and immersion, bringing high-end experiences to a broader range of consumers. These advancements are driven by collaboration across the ecosystem, including game developers, hardware and display manufacturers, chipset providers and entertainment platforms.

Through the work of CTA, leading brands and innovators come together to share insights, define standards and drive progress that benefits the entire industry. That collaboration will be on full display at CES® 2026, the world’s most powerful technology event and a gathering place for the global gaming industry. From product launches to cross-sector conversations, CES remains a critical platform for showcasing the future of interactive entertainment.

Want to Shape the Future of Entertainment?

Join CTA’s Video Promotions Working Group, Gaming Working Group or Content and Entertainment Council to help define the next generation of immersive entertainment.

Contact Eamon Martin at emartin@cta.tech to learn more.
